In production environments that run several servers or instances, the Next.js caching system can present challenges. If you run multiple Next.js instances, you need to consider how the cache behaves and whether it is shared between them.

What is the problem?

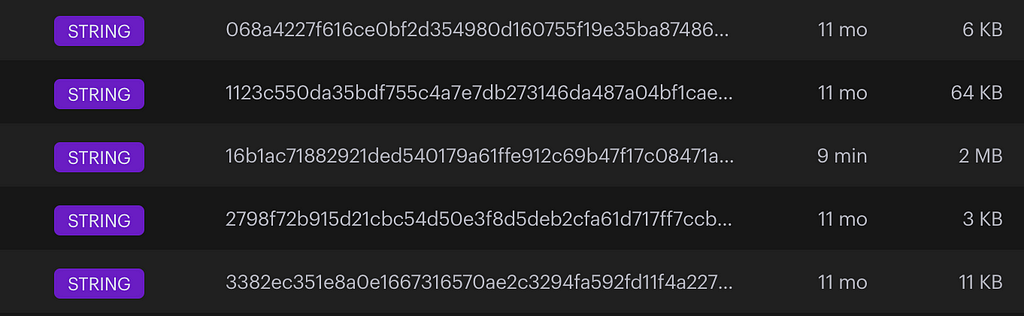

Next.js's cache operates as a black box and stores data in two places: in memory and on the file system. Whichever one it uses, each Next.js instance maintains its own cache, and there is no built-in way to share it. If you rely on revalidation functions such as revalidateTag or revalidatePath, you have to call them on every instance separately, when that is even feasible. Otherwise, data consistency across instances is not guaranteed.
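To make the problem concrete, here is a small simulation in plain JavaScript. It does not use Next.js at all: `createInstance`, `source`, and the `/products` key are hypothetical names, and each "instance" simply owns a private `Map`, which is an assumption standing in for a per-instance cache.

```javascript
// Simulation only: each "instance" owns a private Map, like a per-instance cache.
function createInstance(name, fetchFromSource) {
  const cache = new Map() // private to this instance

  return {
    name,
    // Serve from the local cache, falling back to the data source on a miss.
    read(key) {
      if (!cache.has(key)) {
        cache.set(key, fetchFromSource(key))
      }
      return cache.get(key)
    },
    // Stand-in for revalidateTag/revalidatePath: it only clears this instance's cache.
    revalidate(key) {
      cache.delete(key)
    },
  }
}

// Hypothetical data source whose content changes over time.
const source = { '/products': 'v1' }
const fetchFromSource = (key) => source[key]

const instanceA = createInstance('A', fetchFromSource)
const instanceB = createInstance('B', fetchFromSource)

// Both instances cache the first version.
instanceA.read('/products')
instanceB.read('/products')

// The data changes, and only instance A receives the revalidation request.
source['/products'] = 'v2'
instanceA.revalidate('/products')

const fromA = instanceA.read('/products') // refetched after revalidation
const fromB = instanceB.read('/products') // still served from B's own cache
console.log({ fromA, fromB })
```

Instance A serves the new value while instance B keeps serving the old one, which is the inconsistency described above.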

Fortunately, Next.js provides a solution for custom cache handling. 🚀

https://nextjs.org/docs/app/api-reference/next-config-js/incrementalCacheHandlerPath

You can choose to use an external caching service (e.g., Redis) or build your own in-memory cache logic. With a custom cache handler, you can share cached data across multiple Next.js instances.
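As a rough sketch of the idea, the class below exposes `get`, `set`, and `revalidateTag` methods backed by a single store. The method names follow the shape described in the Next.js custom cache handler documentation, but the class itself, `sharedStore`, and the entry format are simplified assumptions; a `Map` stands in for an external service such as Redis so the example runs on its own.

```javascript
// Stand-in for an external store such as Redis; a real one lives outside the process.
const sharedStore = new Map()

// Simplified handler: every instance talks to the same store.
class SharedCacheHandler {
  async get(key) {
    return sharedStore.get(key) ?? null
  }

  async set(key, data, ctx = {}) {
    sharedStore.set(key, { value: data, lastModified: Date.now(), tags: ctx.tags ?? [] })
  }

  // Remove every entry that was stored with the given tag.
  async revalidateTag(tag) {
    for (const [key, entry] of sharedStore) {
      if (entry.tags.includes(tag)) sharedStore.delete(key)
    }
  }
}

async function demo() {
  const handlerOnInstanceA = new SharedCacheHandler()
  const handlerOnInstanceB = new SharedCacheHandler()

  // Instance A writes an entry; instance B can read it immediately.
  await handlerOnInstanceA.set('/products', '<html>v1</html>', { tags: ['products'] })
  const seenByB = await handlerOnInstanceB.get('/products')

  // Instance B invalidates the tag; instance A no longer finds the entry.
  await handlerOnInstanceB.revalidateTag('products')
  const seenByAAfterRevalidate = await handlerOnInstanceA.get('/products')

  return { seenByB: seenByB.value, seenByAAfterRevalidate }
}

const demoResult = demo()
demoResult.then((result) => console.log(result))
```

Because both handlers read and write the same store, a revalidation issued on one instance is visible to the other.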

Please “Redis” free us

Redis (Remote Dictionary Server) is an open-source, in-memory NoSQL key-value store. It is primarily used as an application cache or a fast database. Because it keeps data in memory rather than on disk, reads and writes are very fast.

Redis is, therefore, an ideal candidate to take over as our cache.

To connect Next.js and Redis, we will use @neshca/cache-handler.

@neshca/cache-handler simplifies the complex task of configuring shared cache strategies in distributed environments, such as multiple independent instances of the same application. It offers a flexible and user-friendly way to integrate custom cache solutions, along with hand-crafted, pre-configured cache strategies for Redis.

The setup on the frontend is straightforward.

The requirements are:

- Next.js 13.5.1 or newer

- Node.js 18.17.0 or newer

- A running Redis server

npm install @neshca/cache-handler redis

@neshca/cache-handler asks us to create a cache-handler.mjs file.

(Here is a working example; there are several ways to use @neshca/cache-handler.)

    // cache-handler.mjs
    import { CacheHandler } from '@neshca/cache-handler'
    import createLruHandler from '@neshca/cache-handler/local-lru'
    import createRedisHandler from '@neshca/cache-handler/redis-strings'
    import { createClient } from 'redis'

    CacheHandler.onCreation(async () => {
      // Skip Redis entirely (for example during a CI build) and use the local LRU cache.
      if (process.env.DISABLE_REDIS) {
        return {
          handlers: [createLruHandler()],
        }
      }

      let client

      try {
        // Create a Redis client.
        client = createClient({
          url: process.env.REDIS_URL ?? 'redis://localhost:6379',
          // Environment variables are strings, so convert the database index to a number.
          database: Number(process.env.REDIS_DATABASE ?? 0),
          socket: {
            // The connection timeout belongs to the socket options.
            connectTimeout: 10000,
            reconnectStrategy: function (retries) {
              if (retries > 5) {
                console.log('Too many attempts to reconnect. Redis connection was terminated')
                return new Error('Too many retries.')
              } else {
                return retries * 500
              }
            },
          },
        })

        // Register an error listener so connection errors are logged instead of going unhandled.
        client.on('error', (error) => console.error('Redis client error:', error))
      } catch (error) {
        console.warn('Failed to create Redis client:', error)
      }

      if (client) {
        try {
          console.info('Connecting Redis client...')
          // Wait for the client to connect. connect() takes no options;
          // timeouts are governed by the socket settings above.
          await client.connect()
          console.info('Redis client connected.')
        } catch (error) {
          console.warn('Failed to connect Redis client:', error)
          console.warn('Disconnecting the Redis client...')
          // Try to disconnect the client to stop it from reconnecting.
          client
            .disconnect()
            .then(() => {
              console.info('Redis client disconnected.')
            })
            .catch(() => {
              console.warn('Failed to quit the Redis client after failing to connect.')
            })
        }
      }

      let handler

      if (client?.isReady) {
        // Use the Redis handler when the client is connected.
        handler = await createRedisHandler({
          client,
          keyPrefix: 'headless_cache:',
          timeoutMs: 1000,
        })
      } else {
        // Fallback to LRU handler if Redis client is not available.
        handler = createLruHandler()
        console.warn('Falling back to LRU handler because Redis client is not available.')
      }

      return {
        handlers: [handler],
      }
    })

    export default CacheHandler
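The reconnectStrategy in cache-handler.mjs is easier to reason about once it is pulled out as a pure function. The sketch below copies its rule into a hypothetical `reconnectDelay` helper and prints the resulting schedule. Whether the client starts counting retries at 0 or at 1 is an assumption to check against the redis client version you use, so the range below starts at 0.

```javascript
// Same rule as the reconnectStrategy in cache-handler.mjs, extracted as a pure function.
function reconnectDelay(retries) {
  if (retries > 5) {
    // Returning an Error tells the client to stop reconnecting.
    return new Error('Too many retries.')
  }
  // Otherwise wait a little longer after each failed attempt.
  return retries * 500
}

const schedule = [0, 1, 2, 3, 4, 5, 6].map((retries) => {
  const result = reconnectDelay(retries)
  return result instanceof Error ? 'give up' : `${result} ms`
})
console.log(schedule)
```

The delay grows linearly by 500 ms per attempt up to 2500 ms, after which the strategy gives up and the handler falls back to the LRU cache.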

We need two environment variables, for example:

REDIS_URL="redis://localhost:6379"

REDIS_DATABASE=0
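Environment variables always arrive as strings, so it can help to validate them in one place before building the client. The helper below is a hypothetical sketch, not part of @neshca/cache-handler: `readRedisConfig` and the `redis://cache.internal:6379` URL are invented for illustration.

```javascript
// Hypothetical helper: turn raw environment variables into typed settings.
function readRedisConfig(env) {
  // REDIS_DATABASE is a string such as "0"; convert it to an integer index.
  const database = Number.parseInt(env.REDIS_DATABASE ?? '0', 10)
  if (Number.isNaN(database) || database < 0) {
    throw new Error(`REDIS_DATABASE must be a non-negative integer, got "${env.REDIS_DATABASE}"`)
  }

  return {
    url: env.REDIS_URL ?? 'redis://localhost:6379',
    database,
    // Mirrors the truthiness check in cache-handler.mjs: any non-empty value disables Redis.
    disabled: Boolean(env.DISABLE_REDIS),
  }
}

const exampleConfig = readRedisConfig({
  REDIS_URL: 'redis://cache.internal:6379', // hypothetical host
  REDIS_DATABASE: '2',
})
console.log(exampleConfig)
```

Note that with a plain truthiness check, `DISABLE_REDIS=0` still disables Redis, because the string "0" is truthy in JavaScript. Leave the variable unset to keep Redis enabled.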

We are now ready to reference our cache handler in next.config.js.

cacheMaxMemorySize: 0,

cacheHandler: process.env.NODE_ENV === 'production' ? require.resolve('./cache-handler.mjs') : undefined,
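For context, here is how those two options might sit inside a complete next.config.js. This is a minimal sketch that assumes a CommonJS config file and no other settings; adapt it to your existing configuration.

```javascript
// next.config.js (sketch: the two options above placed in a complete config object)
/** @type {import('next').NextConfig} */
const nextConfig = {
  // Turn off the default in-memory cache so entries go through the custom handler.
  cacheMaxMemorySize: 0,
  // Load the custom handler in production only; other environments keep the default cache.
  cacheHandler:
    process.env.NODE_ENV === 'production' ? require.resolve('./cache-handler.mjs') : undefined,
}

module.exports = nextConfig
```

On older Next.js releases this setting was exposed as the experimental incrementalCacheHandlerPath option linked earlier, so check the documentation for the version you run.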

Attention: during the build, your Redis server may not be reachable from the Continuous Integration (CI) system. In that case, either pass the environment variable DISABLE_REDIS=1 during the build or give the CI system access to Redis.

Let’s try 🚀🚀

npm run start

Our cache is now stored in and read from our Redis server, and the cache invalidation functions remain fully functional.

Remember that while Next.js's built-in cache is powerful, custom cache handling gives you more control and flexibility. Whether you're optimizing performance, managing shared data, or handling edge cases, a custom cacheHandler is your ally! 🌟

Redis(ing) your Next.js cache was originally published in ekino-france on Medium.