TL;DR: Validating data is not about types; it is about deciding when and where the app encounters failures.

This article is a sequel to my previous article about Zod. Here, I’ll shift away from TypeScript and explore the principle behind data validation and what we should expect from it. This article’s focus is not Zod, but it should answer some questions raised in the comments on my previous article.

1. “Never trust the backend”

While it might come across as a provocative joke, there is definitely a bit of truth in that quote.

Some developers shared with me their experience of using types generated from the API itself. I firmly believe this is a good starting point for avoiding most data-related issues and bugs.

However, you should keep in mind that, if the backend API is being developed concurrently with the frontend:

- you may use an outdated version of the types

- backend data migration can fail, resulting in a mismatch between the data and the ORM structure

Both scenarios can lead to runtime issues.

Data validation can ensure, at runtime, that any given object conforms to a defined schema.

Having good types is not sufficient. There is always a possibility that the data doesn’t conform to the expected format. Hence, the necessity for data validation.
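
To make this concrete, here is a minimal sketch of such a runtime check, written in plain JavaScript rather than with a validation library. The `userSchema` shape, the `findMismatches` helper and the sample payload are all assumptions made up for illustration; a real project would more likely rely on Zod or a similar tool.

```javascript
// Hypothetical schema: each key maps to the `typeof` result the frontend expects.
const userSchema = { id: "number", name: "string", email: "string" };

// Returns a description of every field whose runtime type differs from the schema.
function findMismatches(schema, data) {
  if (data === null || typeof data !== "object") {
    return ["<root>: expected an object"];
  }
  return Object.entries(schema)
    .filter(([key, expected]) => typeof data[key] !== expected)
    .map(([key, expected]) => `${key}: expected ${expected}, got ${typeof data[key]}`);
}

// Imagined payload after a failed migration: `id` became a string, `email` is gone.
const payload = JSON.parse('{"id": "42", "name": "Ada"}');
const mismatches = findMismatches(userSchema, payload);
console.log(mismatches);
```

Generated types would still describe `id` as a number here; only a check performed on the actual payload reveals the difference.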

2. It is not about types

In my previous article, I used a TypeScript flaw to demonstrate why it is essential to validate external data with Zod (or any validation library). But validating external data remains important whether you use TypeScript or not. In fact, TypeScript’s compile-time “validation” may have led us to overlook the risks at runtime.

In the days of plain JavaScript (before TypeScript), web developers used to implement basic protection by mapping the object structure as follows:

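    // Copy only the expected fields; reading a property of a missing object
    // (for example data.address) throws and ends up in the catch block.
    function parseUser(data) {
      try {
        return {
          name: data.name,
          email: data.email,
          address: {
            street: data.address.street,
          },
        };
      } catch {
        throw new Error('Invalid data');
      }
    }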

This kind of function was used directly after fetching the data.

In TypeScript code, data would already be typed and parseUser would output the exact same type. As a result, this entire snippet would seem useless.

While rudimentary, this piece of code is far from useless: it executes crash-prone operations within a “controlled environment”. By retrieving each piece of data individually, we check that the expected structure is there. If the object itself or a nested object such as address is missing, the property access throws and the function fails; a missing leaf value such as name simply comes back as undefined. A great many crashes are thus avoided throughout the application, although there remains a chance of performing an invalid operation on a value itself.

This primitive example of data validation introduces an important principle: failing as soon as possible.
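
As an illustration of failing early, here is a slightly stricter variant of the same idea. It is only a sketch: `requireString` and `parseUserStrict` are hypothetical names, and the choice to require every field to be a string is an assumption, not something the original function enforced.

```javascript
// Throws as soon as a required string is missing, naming the offending path.
function requireString(value, path) {
  if (typeof value !== "string") {
    throw new Error(`Invalid data: "${path}" must be a string`);
  }
  return value;
}

function parseUserStrict(data) {
  // Optional chaining avoids a raw TypeError so that our own, clearer error is thrown.
  return {
    name: requireString(data?.name, "name"),
    email: requireString(data?.email, "email"),
    address: {
      street: requireString(data?.address?.street, "address.street"),
    },
  };
}

// The email is missing, so parsing stops there and reports the exact path.
let failure = null;
try {
  parseUserStrict({ name: "Ada", address: {} });
} catch (error) {
  failure = error.message;
}
console.log(failure); // Invalid data: "email" must be a string
```

The error is raised right after fetching, at the first invalid field, rather than later in some component that happens to read it.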

3. With validation come errors

One misconception about data validation is that it reduces errors. The accurate perspective is that it can prevent crashes. In fact, properly executed data validation is likely to throw or display more errors.

No more silent bugs

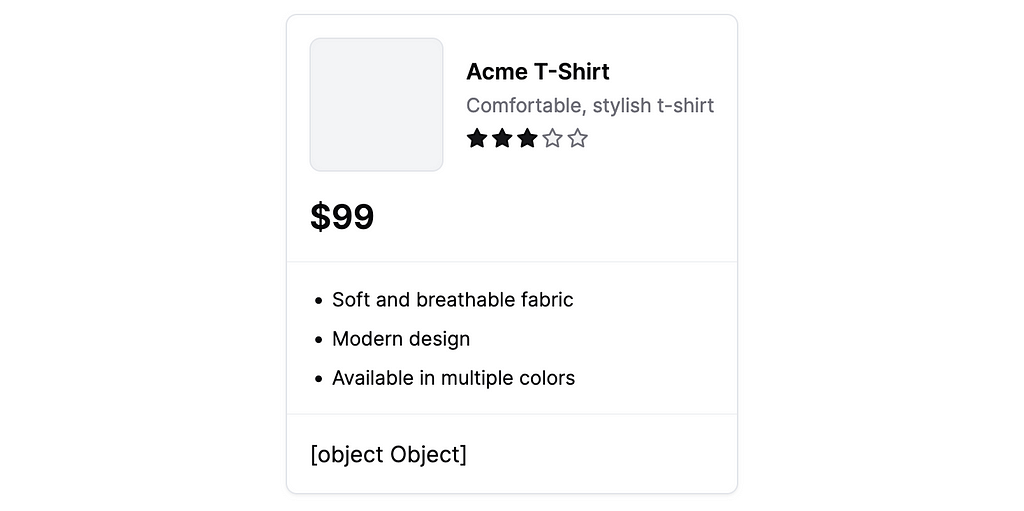

Sometimes, the application does not crash, but runs in a “degraded” mode. Some features will not work quite as intended, or the data will not be displayed properly.

With data validation, silent bugs become errors. As soon as the data is fetched, the application can determine whether the requirements are met for all the dependent screens to display and function properly.

If the requirements are not met, then the application can display a fallback or an error screen. Displaying the error immediately can prevent deceptive user experiences, such as forms leading to unexpected errors.

It is also possible to design a screen with a “degraded” variant, which can work with only a partial API response. Data validation enables better fallbacks for each situation.
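
A rough sketch of how a validation result could drive that choice is shown here. The profile screen, its `name` and `orders` fields, and the `chooseProfileScreen` helper are all hypothetical; the point is only that the decision is made once, right after the data arrives.

```javascript
// Hypothetical requirements for a profile screen: `name` is essential,
// while `orders` only powers an optional widget.
function chooseProfileScreen(data) {
  const hasName = typeof data?.name === "string";
  const hasOrders = Array.isArray(data?.orders);

  if (!hasName) return { screen: "error", reason: "name is missing" };
  if (!hasOrders) return { screen: "degraded", name: data.name };
  return { screen: "full", name: data.name, orders: data.orders };
}

console.log(chooseProfileScreen({ name: "Ada", orders: [] }).screen); // full
console.log(chooseProfileScreen({ name: "Ada" }).screen);             // degraded
console.log(chooseProfileScreen(null).screen);                        // error
```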

Better error tracking

Along with enhancing UX, data validation also improves the developer experience by streamlining debugging. Validating data right after fetching it gathers two of the most error-prone operations at a single point, both in time and in the code.

If these errors are properly tracked, labeled and logged in a monitoring service, a significant amount of investigation time should be saved. We never need to ask ourselves again: is it caused by the data?
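
One possible way to label such errors is sketched here, under stated assumptions: `ValidationError`, `report`, `validateUser` and the `/api/user` endpoint are illustrative names, and `report` is a stand-in that stores events in an array instead of calling a real monitoring service.

```javascript
// Dedicated error type so monitoring can tell data problems from code bugs.
class ValidationError extends Error {
  constructor(endpoint, issues) {
    super(`Invalid response from ${endpoint}: ${issues.join("; ")}`);
    this.name = "ValidationError";
    this.endpoint = endpoint;
    this.issues = issues;
  }
}

// Stand-in for a monitoring client; a real one would send the event elsewhere.
const reportedEvents = [];
function report(error) {
  reportedEvents.push({
    label: error instanceof ValidationError ? "data" : "code",
    message: error.message,
  });
}

// Collects every issue before throwing, so a single log entry tells the whole story.
function validateUser(endpoint, data) {
  const issues = [];
  if (typeof data?.name !== "string") issues.push("name must be a string");
  if (typeof data?.email !== "string") issues.push("email must be a string");
  if (issues.length > 0) throw new ValidationError(endpoint, issues);
  return data;
}

try {
  validateUser("/api/user", { name: "Ada" });
} catch (error) {
  report(error);
}
console.log(reportedEvents);
```

With a label like this attached at the validation step, filtering the monitoring dashboard by "data" answers the question directly.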

4. “Never trust the backend”

(No, this is not an attempt at a running gag.)

Ajv, fed with the API’s OpenAPI schemas, has been suggested for data validation in comments on my previous article. It is real validation, so it brings all the benefits I described earlier. But, in my experience, this solution has a drawback:

There is a chance that the OpenAPI schemas do not perfectly match the actual implementation of the API. Maybe I’ve had bad luck with OpenAPI schemas, but I would not always rely on them (depending on how they have been generated) to perform data validation.

Also, OpenAPI and Ajv are not ideal in a TypeScript application: the type declarations have to be updated along with the OpenAPI schemas. They can be generated automatically, but I don’t like this approach. Instead of declaring the API structure twice, I prefer to think of the validation step from another perspective.

The data validation schemas must define the requirements of the frontend application. Of course, these requirements are not solely determined by the frontend. They can be the result of a joint effort between the backend and the frontend. Or, taking a public API as an example, the requirements will be the intersection of the frontend’s needs and the API implementation.

In other words, we should only validate what the application really needs.
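
A small sketch of this idea follows, again in plain JavaScript and with made-up names: `requiredFields`, `pickValidated` and the sample response are assumptions for illustration. The schema lists only what one screen reads, so unrelated backend changes do not cause failures.

```javascript
// Only the fields this (hypothetical) screen actually reads.
const requiredFields = { name: "string", email: "string" };

// Validates the required fields and returns an object containing only them.
function pickValidated(required, data) {
  const result = {};
  for (const [key, expected] of Object.entries(required)) {
    if (typeof data?.[key] !== expected) {
      throw new Error(`Invalid data: "${key}" must be a ${expected}`);
    }
    result[key] = data[key];
  }
  return result;
}

// The response carries extra or unusual fields that the screen never uses.
const response = { name: "Ada", email: "ada@example.com", last_login: null, flags: ["beta"] };
const user = pickValidated(requiredFields, response);
console.log(user);
```

Because `last_login` and `flags` are not part of the requirements, their shape can change without the frontend raising an error it has no reason to care about.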

Summary

Good type declarations are not enough: our applications must be prepared for unexpected external data. Data validation helps prevent crashes and enables finer handling of errors. If tracked properly, these errors can make debugging more efficient. It may be tempting to rely on the OpenAPI schemas provided by the backend, but you should only validate what the application needs. For all these reasons, I suggest writing your own validation schemas with tools like Zod, keeping in mind the application’s requirements.

Zod: The truth about data validation was originally published in ekino-france on Medium.